
In our pilot study, we draped a thin, flexible electrode array over the surface of the volunteer's brain. The electrodes recorded neural signals and sent them to a speech decoder, which translated the signals into the words the man intended to say. It was the first time a paralyzed person who couldn't speak had used neurotechnology to broadcast whole words, not just letters, from the brain.

That trial was the culmination of more than a decade of research on the underlying brain mechanisms that govern speech, and we're enormously proud of what we've accomplished so far. But we're just getting started.

My lab at UCSF is working with colleagues around the world to make this technology safe, stable, and reliable enough for everyday use at home. We're also working to improve the system's performance so it will be worth the effort.

How neuroprosthetics work

Neuroprosthetics have come a long way in the past two decades. Prosthetic implants for hearing have advanced the furthest, with designs that interface with the cochlear nerve of the inner ear or directly with the auditory brain stem. There's also considerable research on retinal and brain implants for vision, as well as efforts to give people with prosthetic hands a sense of touch. All of these sensory prosthetics take information from the outside world and convert it into electrical signals that feed into the brain's processing centers.

The opposite kind of neuroprosthetic records the electrical activity of the brain and converts it into signals that control something in the outside world, such as a robotic arm, a video-game controller, or a cursor on a computer screen. That last control modality has been used by groups such as the BrainGate consortium to enable paralyzed people to type words, sometimes one letter at a time and sometimes using an autocomplete function to speed up the process.

For that typing-by-brain function, an implant is typically placed in the motor cortex, the part of the brain that controls movement. Then the user imagines certain physical actions to control a cursor that moves over a virtual keyboard. Another approach, pioneered by some of my collaborators in a 2021 paper, had one user imagine that he was holding a pen to paper and writing letters, creating signals in the motor cortex that were translated into text. That approach set a new record for speed, enabling the volunteer to write about 18 words per minute.

In my lab's research, we've taken a more ambitious approach. Instead of decoding a user's intent to move a cursor or a pen, we decode the intent to control the vocal tract, comprising dozens of muscles governing the larynx (commonly called the voice box), the tongue, and the lips.

I began working in this area more than 10 years ago. As a neurosurgeon, I would often see patients with severe injuries that left them unable to speak. To my surprise, in many cases the locations of brain injuries didn't match up with the syndromes I learned about in medical school, and I realized that we still have a lot to learn about how language is processed in the brain. I decided to study the underlying neurobiology of language and, if possible, to develop a brain-machine interface (BMI) to restore communication for people who have lost it. In addition to my neurosurgical background, my team has expertise in linguistics, electrical engineering, computer science, bioengineering, and medicine. Our ongoing clinical trial is testing both hardware and software to explore the limits of our BMI and determine what kind of speech we can restore to people.

The muscles involved in speech

Speech is one of the behaviors that sets humans apart. Plenty of other species vocalize, but only humans combine a set of sounds in myriad different ways to represent the world around them. It's also an extraordinarily complicated motor act; some experts believe it's the most complex motor action that people perform. Speaking is a product of modulated airflow through the vocal tract: with every utterance we shape the breath by creating audible vibrations in our laryngeal vocal folds and changing the shape of the lips, jaw, and tongue.

Many of the muscles of the vocal tract are quite unlike joint-based muscles such as those in the arms and legs, which can move in only a few prescribed ways. For example, the muscle that controls the lips is a sphincter, while the muscles that make up the tongue are governed more by hydraulics: the tongue is largely composed of a fixed volume of muscular tissue, so moving one part of the tongue changes its shape elsewhere. The physics governing the movements of such muscles is totally different from that of the biceps or hamstrings.

Because there are so many muscles involved and they each have so many degrees of freedom, there's essentially an infinite number of possible configurations. But when people speak, it turns out they use a relatively small set of core movements (which differ somewhat in different languages). For example, when English speakers make the "d" sound, they put their tongues behind their teeth; when they make the "k" sound, the backs of their tongues go up to touch the ceiling of the back of the mouth. Few people are conscious of the precise, complex, and coordinated muscle actions required to say the simplest word.

My research group focuses on the parts of the brain's motor cortex that send movement commands to the muscles of the face, throat, mouth, and tongue. These brain regions are multitaskers: They manage the muscle movements that produce speech and also the movements of those same muscles for swallowing, smiling, and kissing.

Studying the neural activity of those regions in a useful way requires both spatial resolution on the scale of millimeters and temporal resolution on the scale of milliseconds. Historically, noninvasive imaging systems have been able to provide one or the other, but not both. When we started this research, we found remarkably little data on how brain activity patterns were associated with even the simplest components of speech: phonemes and syllables.

Here we owe a debt of gratitude to our volunteers. At the UCSF epilepsy center, patients preparing for surgery typically have electrodes surgically placed over the surfaces of their brains for several days so we can map the regions involved when they have seizures. During those few days of wired-up downtime, many patients volunteer for neurological research experiments that make use of the electrode recordings from their brains. My group asked patients to let us study their patterns of neural activity while they spoke words.

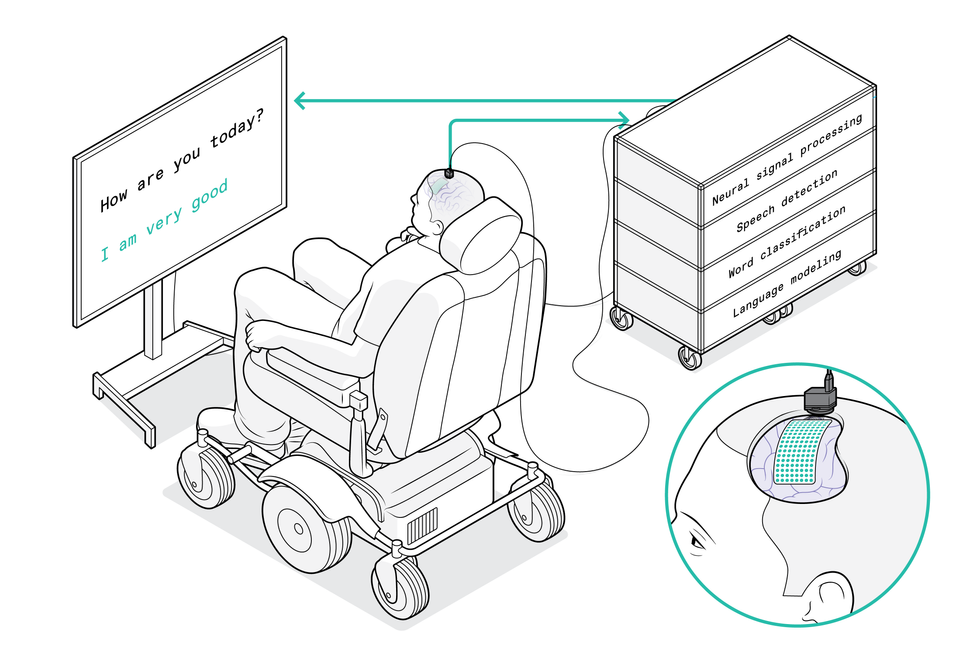

The hardware involved is called electrocorticography (ECoG). The electrodes in an ECoG system don't penetrate the brain but lie on its surface. Our arrays can contain several hundred electrode sensors, each of which records from thousands of neurons. So far, we've used an array with 256 channels. Our goal in those early studies was to discover the patterns of cortical activity when people speak simple syllables. We asked volunteers to say specific sounds and words while we recorded their neural patterns and tracked the movements of their tongues and mouths. Sometimes we did so by having them wear colored face paint and using a computer-vision system to extract the kinematic gestures; other times we used an ultrasound machine positioned under the patients' jaws to image their moving tongues.

We used these systems to match neural patterns to movements of the vocal tract. At first we had a lot of questions about the neural code. One possibility was that neural activity encoded directions for particular muscles, and the brain essentially turned these muscles on and off as if pressing keys on a keyboard. Another idea was that the code determined the velocity of the muscle contractions. Yet another was that neural activity corresponded with coordinated patterns of muscle contractions used to produce a certain sound. (For example, to make the "aaah" sound, both the tongue and the jaw need to drop.) What we discovered was that there is a map of representations that controls different parts of the vocal tract, and that together the different brain areas combine in a coordinated manner to give rise to fluent speech.

The role of AI in today's neurotech

Our work depends on the advances in artificial intelligence over the past decade. We can feed the data we collected about both neural activity and the kinematics of speech into a neural network, then let the machine-learning algorithm find patterns in the associations between the two data sets. It was possible to make connections between neural activity and produced speech, and to use this model to produce computer-generated speech or text. But this technique couldn't train an algorithm for paralyzed people because we'd lack half of the data: We'd have the neural patterns, but nothing about the corresponding muscle movements.

The smarter way to use machine learning, we realized, was to break the problem into two steps. First, the decoder translates signals from the brain into intended movements of muscles in the vocal tract; then it translates those intended movements into synthesized speech or text.

We call this a biomimetic approach because it copies biology: in the human body, neural activity is directly responsible for the vocal tract's movements and only indirectly responsible for the sounds produced. A big advantage of this approach comes in training the decoder for the second step, translating muscle movements into sounds. Because the relationships between vocal tract movements and sound are fairly universal, we were able to train that part of the decoder on large data sets derived from people who weren't paralyzed.
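The two-step idea can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the UCSF decoder: it swaps the neural networks described above for a linear map (stage 1) and a nearest-prototype lookup (stage 2), and the articulator coordinates, weights, and prototype values are all hypothetical numbers chosen for the example.

```python
from math import dist  # Euclidean distance, Python 3.8+

# Hypothetical articulator coordinates: (jaw opening, tongue-tip height).
# Stage-2 prototypes like these could, in principle, be estimated from
# speakers who are not paralyzed, because the link between vocal-tract
# movements and sound is fairly universal.
EXAMPLE_PROTOTYPES = {
    "aa": (1.0, 0.0),  # jaw and tongue drop
    "d": (0.2, 1.0),   # tongue tip raised behind the teeth
}

# Hypothetical stage-1 weights: one row per articulator coordinate,
# one column per neural feature (three made-up recording channels).
EXAMPLE_WEIGHTS = [
    [0.8, 0.0, 0.4],
    [0.0, 1.0, 0.0],
]


def neural_to_kinematics(features, weights):
    """Stage 1: map a neural feature vector to intended articulator positions."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def kinematics_to_sound(kinematics, prototypes):
    """Stage 2: pick the sound whose articulator prototype is closest."""
    return min(prototypes, key=lambda label: dist(kinematics, prototypes[label]))


def decode(features, weights=EXAMPLE_WEIGHTS, prototypes=EXAMPLE_PROTOTYPES):
    """Chain the two stages: brain signals -> movements -> sound label."""
    return kinematics_to_sound(neural_to_kinematics(features, weights), prototypes)


print(decode([1.0, 0.1, 0.5]))  # kinematics about [1.0, 0.1]; prints aa
print(decode([0.0, 0.9, 0.5]))  # kinematics about [0.2, 0.9]; prints d
```

In this split, only stage 1 needs recordings from the person using the device; stage 2 can be fit once on data from able-bodied speakers and reused, which is the training advantage of the biomimetic approach.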

A clinical trial to test our speech neuroprosthetic

The next big challenge was to bring the technology to the people who could really benefit from it.

The Nationwide Institutes of Well being (NIH) is funding

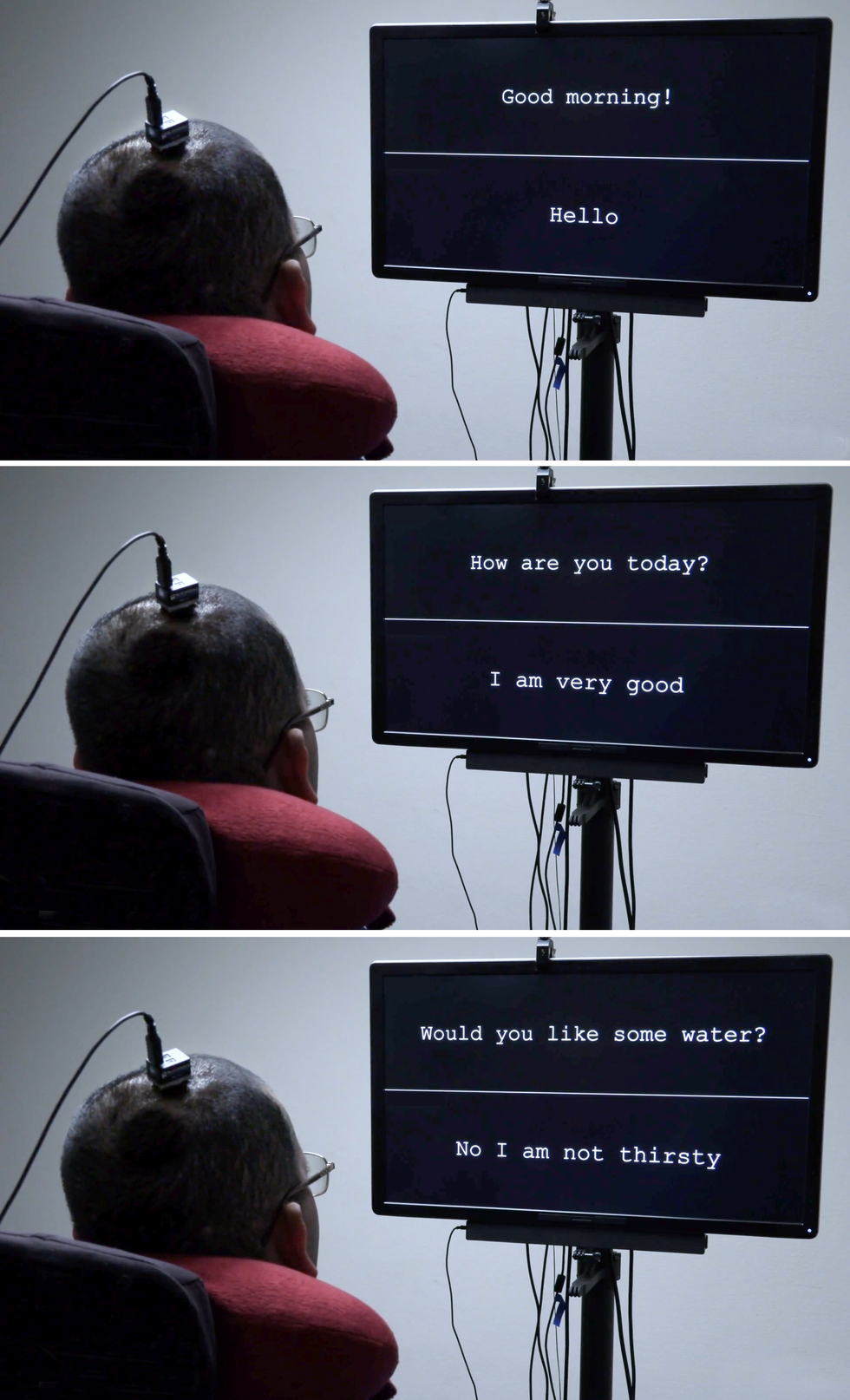

our pilot trial, which started in 2021. We have already got two paralyzed volunteers with implanted ECoG arrays, and we hope to enroll extra within the coming years. The first purpose is to enhance their communication, and we’re measuring efficiency by way of phrases per minute. A median grownup typing on a full keyboard can sort 40 phrases per minute, with the quickest typists reaching speeds of greater than 80 phrases per minute.

We think that tapping into the speech system can provide even better results. Human speech is much faster than typing: An English speaker can easily say 150 words in a minute. We'd like to enable paralyzed people to communicate at a rate of 100 words per minute. We have a lot of work to do to reach that goal, but we think our approach makes it a feasible target.
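The rates quoted in this section are easier to compare as time per message. The short Python sketch below converts words per minute into the seconds needed for a hypothetical 30-word message; the rates come from the text, while the message length is an arbitrary choice.

```python
def seconds_for_message(words, wpm):
    """Seconds needed to produce `words` words at `wpm` words per minute."""
    return words * 60 / wpm


# Rates mentioned in the text, in words per minute.
RATES_WPM = {
    "handwriting BCI (2021 record)": 18,
    "average typist": 40,
    "fast typist": 80,
    "speech BMI goal": 100,
    "natural English speech": 150,
}

MESSAGE_WORDS = 30  # hypothetical message length

for label, wpm in RATES_WPM.items():
    print(f"{label:>30}: {seconds_for_message(MESSAGE_WORDS, wpm):5.1f} s")
```

At the 100-word-per-minute goal, the message takes 18 seconds instead of 100 seconds at the handwriting record, roughly a 5.6-fold speedup (100 / 18 ≈ 5.6).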

The implant procedure is routine. First the surgeon removes a small portion of the skull; next, the flexible ECoG array is gently placed across the surface of the cortex. Then a small port is fixed to the skull bone and exits through a separate opening in the scalp. We currently need that port, which attaches to external wires to transmit data from the electrodes, but we hope to make the system wireless in the future.

We've considered using penetrating microelectrodes, because they can record from smaller neural populations and may therefore provide more detail about neural activity. But the current hardware isn't as robust and safe as ECoG for clinical applications, especially over many years.

Another consideration is that penetrating electrodes typically require daily recalibration to turn the neural signals into clear commands, and research on neural devices has shown that speed of setup and performance reliability are key to getting people to use the technology. That's why we've prioritized stability in creating a "plug and play" system for long-term use. We conducted a study looking at the variability of a volunteer's neural signals over time and found that the decoder performed better if it used data patterns across multiple sessions and multiple days. In machine-learning terms, we say that the decoder's "weights" carried over, creating consolidated neural signals.
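The article does not spell out how the decoder pools data across sessions. As a minimal sketch of the general idea, assuming a deliberately simple one-feature linear decoder (the class `CumulativeLinearDecoder` is hypothetical), the Python below keeps running least-squares statistics that persist from one session to the next, so each day's recordings refine the existing fit instead of being replaced by a fresh daily calibration.

```python
class CumulativeLinearDecoder:
    """Toy 1-D least-squares decoder: target ~ slope * feature + intercept.

    Sufficient statistics are accumulated across sessions, so the fitted
    weights carry over rather than being recalibrated from scratch each day.
    This is an illustration, not the decoder used in the trial.
    """

    def __init__(self):
        self.n = 0
        self.sum_x = 0.0
        self.sum_y = 0.0
        self.sum_xx = 0.0
        self.sum_xy = 0.0

    def add_session(self, xs, ys):
        """Fold one session's (feature, target) pairs into the running totals."""
        for x, y in zip(xs, ys):
            self.n += 1
            self.sum_x += x
            self.sum_y += y
            self.sum_xx += x * x
            self.sum_xy += x * y

    def weights(self):
        """Closed-form ordinary least squares over every session seen so far."""
        denom = self.n * self.sum_xx - self.sum_x ** 2
        if self.n < 2 or denom == 0:
            raise ValueError("need at least two distinct feature values")
        slope = (self.n * self.sum_xy - self.sum_x * self.sum_y) / denom
        intercept = (self.sum_y - slope * self.sum_x) / self.n
        return slope, intercept


decoder = CumulativeLinearDecoder()
decoder.add_session([0.0, 1.0, 2.0], [1.0, 3.0, 5.0])  # hypothetical day 1
decoder.add_session([3.0, 4.0], [7.0, 9.0])            # hypothetical day 2
print(decoder.weights())  # (2.0, 1.0)
```

A real system would involve many channels and might down-weight or regularize older sessions; the point here is only that pooled statistics let the fit keep improving as sessions accumulate.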

College of California, San Francisco

Because our paralyzed volunteers can't speak while we watch their brain patterns, we asked our first volunteer to try two different approaches. He started with a list of 50 words that are handy for daily life, such as "hungry," "thirsty," "please," "help," and "computer." During 48 sessions over several months, we sometimes asked him to just imagine saying each of the words on the list, and sometimes asked him to overtly try to say them. We found that attempts to speak generated clearer brain signals and were sufficient to train the decoding algorithm. Then the volunteer could use those words from the list to generate sentences of his own choosing, such as "No I'm not thirsty."
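The article does not detail how single-word predictions become sentences. One standard technique, sketched here under assumptions, is to combine a word classifier's per-attempt probabilities over the small vocabulary with a language model of which words tend to follow which, and to pick the jointly most likely sequence with Viterbi decoding. The vocabulary subset, classifier probabilities, bigram probabilities, and the `floor` smoothing value below are all made up for illustration.

```python
from math import log


def greedy_words(attempt_probs):
    """Pick the single most probable word for each attempt, ignoring context."""
    return [max(probs, key=probs.get) for probs in attempt_probs]


def viterbi_words(attempt_probs, bigram, vocab, floor=1e-6):
    """Most probable word sequence under classifier scores plus a bigram model.

    attempt_probs: one dict per attempt, word -> P(word | neural activity)
    bigram: dict (previous word, word) -> P(word | previous word); "<s>" = start
    floor: probability assumed for any pair or word not listed (toy smoothing)
    """
    def trans(prev, word):
        return log(bigram.get((prev, word), floor))

    # best[word] = (log score, path) of the best sequence ending in `word`
    best = {
        w: (trans("<s>", w) + log(attempt_probs[0].get(w, floor)), [w])
        for w in vocab
    }
    for probs in attempt_probs[1:]:
        new_best = {}
        for w in vocab:
            # Best predecessor for w, counting its score plus the transition.
            prev, (score, path) = max(
                best.items(), key=lambda item: item[1][0] + trans(item[0], w)
            )
            new_best[w] = (
                score + trans(prev, w) + log(probs.get(w, floor)),
                path + [w],
            )
        best = new_best
    return max(best.values(), key=lambda entry: entry[0])[1]


VOCAB = ["no", "I", "am", "not", "thirsty", "hungry"]  # hypothetical subset
ATTEMPTS = [  # hypothetical classifier outputs, one dict per attempted word
    {"no": 0.9, "not": 0.1},
    {"I": 0.4, "am": 0.6},  # classifier slightly prefers the wrong word
    {"am": 0.7, "I": 0.3},
    {"not": 0.8, "no": 0.2},
    {"thirsty": 0.6, "hungry": 0.4},
]
BIGRAM = {  # hypothetical language-model probabilities
    ("<s>", "no"): 0.5, ("<s>", "I"): 0.5,
    ("no", "I"): 0.9, ("no", "am"): 0.1,
    ("I", "am"): 0.9,
    ("am", "not"): 0.5, ("am", "thirsty"): 0.25, ("am", "hungry"): 0.25,
    ("not", "thirsty"): 0.5, ("not", "hungry"): 0.5,
}

print(" ".join(greedy_words(ATTEMPTS)))                  # no am am not thirsty
print(" ".join(viterbi_words(ATTEMPTS, BIGRAM, VOCAB)))  # no I am not thirsty
```

The classifier alone picks "am" for the second attempt; the bigram model, which strongly expects "I" after "no", overrides that choice because the combined path score is higher.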

We're now pushing to expand to a broader vocabulary. To make that work, we need to continue to improve the current algorithms and interfaces, but I am confident those improvements will happen in the coming months and years. Now that the proof of principle has been established, the goal is optimization. We can focus on making our system faster, more accurate, and, most important, safer and more reliable. Things should move quickly now.

Probably the biggest breakthroughs will come if we can get a better understanding of the brain systems we're trying to decode, and how paralysis alters their activity. We've come to realize that the neural patterns of a paralyzed person who can't send commands to the muscles of their vocal tract are very different from those of an epilepsy patient who can. We're attempting an ambitious feat of BMI engineering while there is still lots to learn about the underlying neuroscience. We believe it will all come together to give our patients their voices back.
